Code: https://github.com/WongKinYiu/yolov7

① Results first

Final validation output at the end of training (intermediate progress-bar updates omitted):

Class Images Labels P R mAP@.5 mAP@.5:.95

all 188 219 0.932 0.881 0.904 0.383

300 epochs completed in 9.874 hours.

Optimizer stripped from runs/train/exp3/weights/last.pt, 74.8MB

Optimizer stripped from runs/train/exp3/weights/best.pt, 74.8MB

Conclusion: I trained yolov5, yolov6 and yolov7 on exactly the same dataset (roughly 1000 images). After running detect, YOLOv7 gave the worst results, with many missed detections, and I don't yet know why. yolov6 had essentially only two missed detections.
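To compare runs across yolov5, yolov6 and yolov7 it can help to turn the summary row into named fields. The following is a minimal sketch, assuming the row keeps the seven whitespace-separated columns shown in the header (Class, Images, Labels, P, R, mAP@.5, mAP@.5:.95). The names `ValSummary` and `parse_summary_row` are hypothetical helpers, not part of the YOLOv7 repository.

```python
from typing import NamedTuple


class ValSummary(NamedTuple):
    """One row of the validation table printed at the end of training."""
    cls: str
    images: int
    labels: int
    precision: float
    recall: float
    map50: float
    map50_95: float


def parse_summary_row(row: str) -> ValSummary:
    """Split a whitespace-separated results row into named fields."""
    parts = row.split()
    if len(parts) != 7:
        raise ValueError(f"expected 7 columns, got {len(parts)}: {row!r}")
    return ValSummary(
        parts[0],
        int(parts[1]),
        int(parts[2]),
        *(float(value) for value in parts[3:]),
    )


# The row reported for this training run.
summary = parse_summary_row("all 188 219 0.932 0.881 0.904 0.383")
print(summary.recall, summary.map50_95)
```

A recall of 0.881 on 219 labels means some validation targets are still missed at the evaluation threshold, which is at least consistent with the missed detections seen at detect time.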

② Environment

The official repository ships a requirements file, but I reused the same environment I had for yolov5 and ran into no problems.

③ Dataset

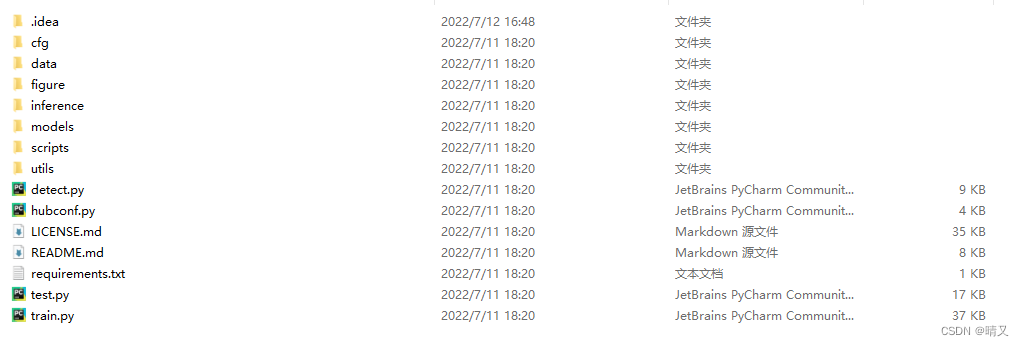

First, look at the structure of the downloaded code. The dataset goes under data. The original contents of data:

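After adding the dataset:

1. Training set

Under train:

2. Validation set

Under val: the two cache files can be ignored. As long as your dataset is placed correctly, they are generated automatically later.

3. Dataset structure

Put the images under images as usual, and put the labels (txt files) under labels. That is all for the dataset.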
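Before training, it can be worth checking that every image has a label file with the same stem. Below is a minimal sketch, assuming the layout described above (`data/train/images`, `data/train/labels`, and the same for `val`). The function name `find_unlabeled_images` and the list of image extensions are assumptions made for illustration.

```python
from pathlib import Path

# Assumption: extend this set if the dataset uses other image formats.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def find_unlabeled_images(split_dir):
    """Return names of images in split_dir/images with no labels/<stem>.txt."""
    split = Path(split_dir)
    missing = []
    for image in sorted((split / "images").iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # skip cache files and anything that is not an image
        label = split / "labels" / (image.stem + ".txt")
        if not label.is_file():
            missing.append(image.name)
    return missing


for split_name in ("data/train", "data/val"):
    # Only check splits that exist relative to the current directory.
    if Path(split_name, "images").is_dir():
        print(split_name, find_unlabeled_images(split_name))
```

An empty list for both splits should correspond to the "0 missing" count that the training log prints when it scans the labels.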

④ Training

1. Modify coco.yaml

Original:

# COCO 2017 dataset http://cocodataset.org

# download command/URL (optional)

download: bash ./scripts/get_coco.sh

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

train: ./coco/train2017.txt # 118287 images

val: ./coco/val2017.txt # 5000 images

test: ./coco/test-dev2017.txt # 20288 of 40670 images, submit to https://competitions.codalab.org/competitions/20794

# number of classes

nc: 80

# class names

names: [ 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

'hair drier', 'toothbrush' ]

After modification:

# COCO 2017 dataset http://cocodataset.org

# download command/URL (optional)

# I commented out the line below because I am not using their dataset

# download: bash ./scripts/get_coco.sh

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

train: ./data/train/images # 748 images

val: ./data/val/images # 188 images

test: ./data/val/images # reuses the validation images

# number of classes

nc: 1

# class names

names: [ 'crack' ]
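The two fields that have to agree here are `nc` and `names`: the class count must equal the length of the names list. One way to avoid a mismatch is to render the file from a single list of class names. This is a minimal sketch using only the standard library. `make_data_yaml` is a hypothetical helper, and it only emits the keys shown in the modified file above.

```python
def make_data_yaml(train_dir, val_dir, names, test_dir=None):
    """Render a minimal dataset YAML, deriving nc from the names list."""
    quoted = ", ".join(f"'{name}'" for name in names)
    lines = [
        f"train: {train_dir}",
        f"val: {val_dir}",
        # Fall back to the validation images when no test split exists.
        f"test: {test_dir or val_dir}",
        f"nc: {len(names)}",
        f"names: [ {quoted} ]",
    ]
    return "\n".join(lines) + "\n"


text = make_data_yaml("./data/train/images", "./data/val/images", ["crack"])
print(text)
```

Writing `text` to `data/coco.yaml` (or to a separate file passed via `--data`) gives the same configuration as the hand-edited version.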

Modify './cfg/training/yolov7.yaml'

The main change is the number of classes:

# parameters

nc: 1 # number of classes

depth_multiple: 1.0 # model depth multiple

width_multiple: 1.0 # layer channel multiple
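Changing `nc` changes the size of the detection head. As a rough sketch, assuming the YOLOv5-style head layout with 3 anchors per detection layer and 5 box/objectness values per anchor (both are assumptions about the model config, not something stated in this post), the output channels per detection layer can be computed as follows. `detect_head_channels` is a hypothetical helper.

```python
def detect_head_channels(nc, anchors_per_layer=3):
    """Channels of one detection layer: anchors * (x, y, w, h, objectness + nc classes)."""
    return anchors_per_layer * (nc + 5)


print(detect_head_channels(80))  # COCO default: 255
print(detect_head_channels(1))   # single 'crack' class: 18
```

Because the head shapes no longer match the COCO-pretrained checkpoint, those tensors presumably cannot be copied over, which would explain why the training log reports "Transferred 552/566 items" rather than all of them.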

Modify train.py

if __name__ == '__main__':

    parser = argparse.ArgumentParser()

    parser.add_argument('--weights', type=str, default='./weights/yolov7.pt', help='initial weights path')

    parser.add_argument('--cfg', type=str, default='./cfg/training/yolov7.yaml', help='model.yaml path')

    parser.add_argument('--data', type=str, default='data/coco.yaml', help='data.yaml path')

    parser.add_argument('--hyp', type=str, default='data/hyp.scratch.p5.yaml', help='hyperparameters path')

    parser.add_argument('--epochs', type=int, default=300)

    parser.add_argument('--batch-size', type=int, default=12, help='total batch size for all GPUs')

    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='[train, test] image sizes')

    parser.add_argument('--rect', action='store_true', help='rectangular training')

    parser.add_argument('--resume', nargs='?', const=True, default=False, help='resume most recent training')

    parser.add_argument('--nosave', action='store_true', help='only save final checkpoint')

    parser.add_argument('--notest', action='store_true', help='only test final epoch')

    parser.add_argument('--noautoanchor', action='store_true', help='disable autoanchor check')

    parser.add_argument('--evolve', action='store_true', help='evolve hyperparameters')

    parser.add_argument('--bucket', type=str, default='', help='gsutil bucket')

    parser.add_argument('--cache-images', action='store_true', help='cache images for faster training')

    parser.add_argument('--image-weights', action='store_true', help='use weighted image selection for training')

    parser.add_argument('--device', default='0', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

    parser.add_argument('--multi-scale', action='store_true', help='vary img-size +/- 50%%')

    parser.add_argument('--single-cls', action='store_true', help='train multi-class data as single-class')

    parser.add_argument('--adam', action='store_true', help='use torch.optim.Adam() optimizer')

    parser.add_argument('--sync-bn', action='store_true', help='use SyncBatchNorm, only available in DDP mode')

    parser.add_argument('--local_rank', type=int, default=-1, help='DDP parameter, do not modify')

    parser.add_argument('--workers', type=int, default=8, help='maximum number of dataloader workers')

    parser.add_argument('--project', default='runs/train', help='save to project/name')

    parser.add_argument('--entity', default=None, help='W&B entity')

    parser.add_argument('--name', default='exp', help='save to project/name')

    parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')

    parser.add_argument('--quad', action='store_true', help='quad dataloader')

    parser.add_argument('--linear-lr', action='store_true', help='linear LR')

    parser.add_argument('--label-smoothing', type=float, default=0.0, help='Label smoothing epsilon')

    parser.add_argument('--upload_dataset', action='store_true', help='Upload dataset as W&B artifact table')

    parser.add_argument('--bbox_interval', type=int, default=-1, help='Set bounding-box image logging interval for W&B')

    parser.add_argument('--save_period', type=int, default=-1, help='Log model after every "save_period" epoch')

    parser.add_argument('--artifact_alias', type=str, default="latest", help='version of dataset artifact to be used')

    opt = parser.parse_args()

I changed the defaults of '--weights', '--cfg', '--data', '--hyp', '--epochs' and '--device'. Then start training:

python train.py

Namespace(adam=False, artifact_alias='latest', batch_size=12, bbox_interval=-1, bucket='', cache_images=False, cfg='./cfg/training/yolov7.yaml', data='data/coco.yaml', device='0', entity=None, epochs=300, evolve=False, exist_ok=False, global_rank=-1, hyp='data/hyp.scratch.p5.yaml', image_weights=False, img_size=[640, 640], label_smoothing=0.0, linear_lr=False, local_rank=-1, multi_scale=False, name='exp', noautoanchor=False, nosave=False, notest=False, project='runs/train', quad=False, rect=False, resume=False, save_dir='runs/train/exp2', save_period=-1, single_cls=False, sync_bn=False, total_batch_size=12, upload_dataset=False, weights='./weights/yolov7.pt', workers=8, world_size=1)

tensorboard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/

hyperparameters: lr0=0.01, lrf=0.1, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.3, cls_pw=1.0, obj=0.7, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.2, scale=0.9, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.15, copy_paste=0.0, paste_in=0.15

wandb: Install Weights & Biases for YOLOR logging with 'pip install wandb' (recommended)

Model Summary: 415 layers, 37196556 parameters, 37196556 gradients

Transferred 552/566 items from ./weights/yolov7.pt

Scaled weight_decay = 0.00046875

Optimizer groups: 95 .bias, 95 conv.weight, 98 other

train: Scanning 'data/train/labels.cache' images and labels... 748 found, 0 missing, 0 empty, 0 corrupted: 100%|██████████| 748/748 [00:00<?, ?it/s]

val: Scanning 'data/val/labels.cache' images and labels... 188 found, 0 missing, 0 empty, 0 corrupted: 100%|██████████| 188/188 [00:00<?, ?it/s]

autoanchor: Analyzing anchors... anchors/target = 3.42, Best Possible Recall (BPR) = 1.0000

Image sizes 640 train, 640 test

Using 4 dataloader workers

Logging results to runs/train/exp2

Starting training for 300 epochs...
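The log line "Scaled weight_decay = 0.00046875" differs from the `weight_decay=0.0005` in the hyperparameters, which can look like a mistake. It can be reproduced with the sketch below, assuming train.py follows the YOLOv5 convention of accumulating gradients toward a nominal batch size of 64 (the value 64 and the helper name `scaled_weight_decay` are assumptions, not taken from this post).

```python
def scaled_weight_decay(weight_decay, total_batch_size, nominal_batch_size=64):
    """Scale weight decay by the effective batch size relative to the nominal one."""
    # Number of batches accumulated before each optimizer step.
    accumulate = max(round(nominal_batch_size / total_batch_size), 1)
    scale = total_batch_size * accumulate / nominal_batch_size
    return weight_decay * scale, accumulate


wd, accumulate = scaled_weight_decay(0.0005, 12)
print(accumulate, wd)
```

With a batch size of 12, the accumulation count is round(64 / 12) = 5, the effective batch is 60, and 0.0005 × 60 / 64 = 0.00046875, which matches the logged value.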

Inference

Modify detect.py

parser = argparse.ArgumentParser()

parser.add_argument('--weights', nargs='+', type=str, default='./runs/train/exp3/weights/best.pt', help='model.pt path(s)')

parser.add_argument('--source', type=str, default='./data/val/images', help='source') # file/folder, 0 for webcam

parser.add_argument('--img-size', type=int, default=640, help='inference size (pixels)')

parser.add_argument('--conf-thres', type=float, default=0.25, help='object confidence threshold')

parser.add_argument('--iou-thres', type=float, default=0.45, help='IOU threshold for NMS')

parser.add_argument('--device', default='0', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

parser.add_argument('--view-img', action='store_true', help='display results')

parser.add_argument('--save-txt', action='store_true', help='save results to *.txt')

parser.add_argument('--save-conf', action='store_true', help='save confidences in --save-txt labels')

parser.add_argument('--nosave', action='store_true', help='do not save images/videos')

parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --class 0, or --class 0 2 3')

parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')

parser.add_argument('--augment', action='store_true', help='augmented inference')

parser.add_argument('--update', action='store_true', help='update all models')

parser.add_argument('--project', default='runs/detect', help='save results to project/name')

parser.add_argument('--name', default='exp', help='save results to project/name')

parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')

parser.add_argument('--no-trace', action='store_true', help='don`t trace model')

opt = parser.parse_args()

print(opt)

#check_requirements(exclude=('pycocotools', 'thop'))

I changed the defaults of '--weights', '--source' and '--device'. Then start inference:

python detect.py

The results are saved in the exp folder under runs/detect/.
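Editing the defaults inside detect.py works, but the same values can also be passed as command-line flags, which leaves the script untouched. Here is a minimal sketch that builds such a command from Python, using only flags that appear in the argparse block above. `build_detect_command` is a hypothetical helper, and the command is only printed here, not executed.

```python
import shlex


def build_detect_command(weights, source, device="0", conf_thres=0.25):
    """Build the detect.py argument list from flags defined in its argparse block."""
    return [
        "python", "detect.py",
        "--weights", weights,
        "--source", source,
        "--device", str(device),
        "--conf-thres", str(conf_thres),
    ]


cmd = build_detect_command(
    "./runs/train/exp3/weights/best.pt",
    "./data/val/images",
)
print(shlex.join(cmd))
```

Running the printed command from the repository root (or passing `cmd` to `subprocess.run(cmd, check=True)`) should behave like the edited script. Exposing `--conf-thres` this way also makes it easy to try a lower threshold when investigating missed detections. The same approach applies to train.py and its flags.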