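Tanzu Kubernetes Grid (TKG) is VMware's enterprise Kubernetes distribution and the runtime foundation of the Tanzu product family. It can be deployed across private and public clouds and gives users a consistent Kubernetes experience. To cover learning, development, testing, and production use, VMware also released a community counterpart to TKG, Tanzu Community Edition (TCE), which replaces the enterprise components in TKG with corresponding open-source components.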

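VMware Tanzu Community Edition (TCE) is a full-featured, easy-to-manage Kubernetes platform for learners and users. It is a free, community-supported, open-source distribution of VMware Tanzu that can be installed and configured in minutes on a local workstation or in the cloud. The project supports building application platforms, and it uses Cluster API to deploy and manage Kubernetes clusters declaratively.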

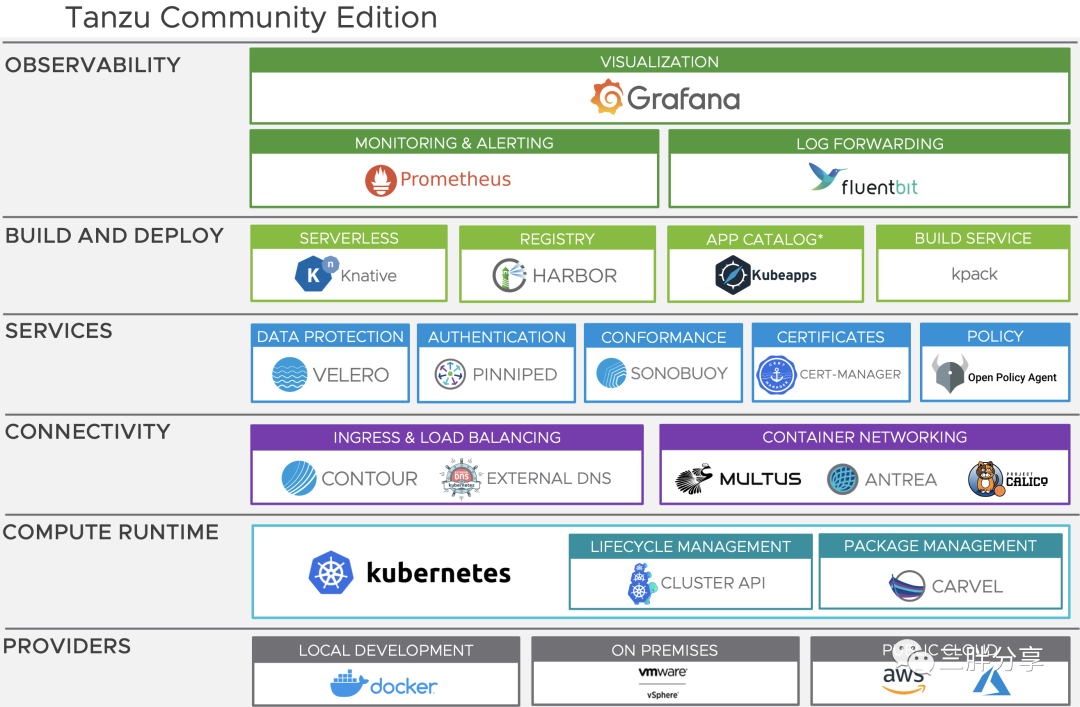

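Kubernetes is the foundation on which workloads are scheduled. On top of that foundation, Tanzu Community Edition lets you install platform packages that support the applications running in the cluster. It does this by providing a set of validated components.

It also lets you add to these components or replace them with your own. This flexibility lets you meet the specific production requirements of your application platform without starting from scratch. With Tanzu Community Edition, cloud-native practitioners can learn, evaluate, or use Kubernetes and other cloud-native technologies on their own in a community-supported environment.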

https://github.com/vmware-tanzu/community-edition

https://tanzucommunityedition.io/

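TCE (Tanzu Community Edition) architecture

Tanzu Community Edition consists of several components that support bootstrapping and managing Kubernetes clusters and operating platform services. The TCE architecture is described below: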

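1. Tanzu CLI

Each subcommand of the tanzu CLI provides a piece of Tanzu Community Edition (TCE) functionality, ranging from creating clusters to managing the software running in them. Each subcommand is a standalone static binary hosted on the client system. This gives a pluggable architecture in which plugins can be added, removed, and updated independently. The tanzu command is expected to be installed on the machine's PATH, and each subcommand is expected to be installed under ${XDG_DATA_HOME}/tanzu-cli. This relationship is shown below.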
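To make the plugin location concrete, here is a minimal sketch that resolves the directory named above. The function names are made up for illustration, and the fallback to $HOME/.local/share is the default from the XDG Base Directory specification; whether the Tanzu CLI applies exactly the same fallback is an assumption.

```shell
#!/bin/sh
# Print the directory where Tanzu CLI plugins are expected to live.
# Falls back to $HOME/.local/share when XDG_DATA_HOME is unset or empty
# (the XDG spec default; assumed, not confirmed, for the Tanzu CLI).
tanzu_plugin_dir() {
    printf '%s/tanzu-cli\n' "${XDG_DATA_HOME:-$HOME/.local/share}"
}

# List the plugin binaries if the directory exists.
# On a machine without the Tanzu CLI the directory is usually absent.
list_tanzu_plugins() {
    dir=$(tanzu_plugin_dir)
    if [ -d "$dir" ]; then
        ls -1 "$dir"
    else
        echo "no plugin directory at $dir" >&2
        return 1
    fi
}
```

Calling list_tanzu_plugins after an installation is a quick way to see which plugin binaries ended up on disk.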

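Tanzu Community Edition ships with the Tanzu CLI and a curated set of plugins. Some plugins live in the vmware-tanzu/community-edition repository and others in vmware-tanzu/tanzu-framework. Plugins in vmware-tanzu/tanzu-framework can be used across multiple Tanzu editions; plugins in vmware-tanzu/community-edition are used only in Tanzu Community Edition. A plugin in vmware-tanzu/community-edition can be promoted (moved) to vmware-tanzu/tanzu-framework. This move does not affect Tanzu Community Edition users; it affects only plugin contributors. Plugins may also live in repositories outside vmware-tanzu/community-edition and vmware-tanzu/tanzu-framework.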

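2. Managed clusters

Managed clusters are deployed and managed by a management cluster. When the tanzu management-cluster create command runs, it creates a bootstrap cluster on the local host, and that bootstrap cluster is used to create the management cluster, as shown below.

Once the management cluster is up, the bootstrap cluster moves all management objects to it. The management cluster is then responsible for managing itself and any new clusters it creates. These new clusters are called workload clusters. The end-to-end relationship is shown below.

The managed-cluster model deploys one management cluster and N workload clusters. The management cluster provides management and operations for Tanzu. It runs Cluster API, which manages the workload clusters, and multi-cluster services. Workload clusters are where developers' workloads run.

When you create a management cluster, a kind bootstrap cluster is created on the local machine. This is a kind-based cluster that runs in Docker. The bootstrap cluster creates the management cluster on the specified provider. The information about how to manage clusters in the target environment is then moved to the management cluster. At that point, the local bootstrap cluster is deleted.

The management cluster can now create workload clusters. Workload clusters are deployed by the management cluster and run your application workloads. You deploy them with the Tanzu CLI.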
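As a rough sketch of the sequence described above, the wrapper below strings together the three Tanzu CLI commands that appear later in this walkthrough. The function names, the DRY_RUN switch, and the argument order are illustrative assumptions and not part of the Tanzu CLI; the config file paths are supplied by the caller.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Create the management cluster, then one workload cluster, then list clusters.
# $1 = management cluster name, $2 = management cluster config file,
# $3 = workload cluster config file (all hypothetical values from the caller).
deploy_managed() {
    mgmt_name=$1
    mgmt_config=$2
    workload_config=$3
    run tanzu management-cluster create "$mgmt_name" --file "$mgmt_config" -v 9 &&
    run tanzu cluster create -f "$workload_config" -v 9 &&
    run tanzu cluster list
}
```

Running it with DRY_RUN=1 first prints the plan without touching any infrastructure, which is handy because a real management cluster creation can take a long time.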

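3. Standalone clusters

The standalone cluster deployment model provides a single-node, local workstation cluster suited to development and test environments. It needs minimal local resources and deploys quickly, and several clusters can run side by side. By default, a standalone cluster automatically installs the Tanzu Community Edition package repository.

Standalone clusters provide a Tanzu environment for development and experimentation. By default, they run locally through kind with the Tanzu components installed.

Standalone clusters are positioned to compete with Docker Desktop and minikube on the desktop and to build user habits.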

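4. Package management

Tanzu Community Edition provides package management to users through the Tanzu CLI. Package management is defined as the discovery, installation, upgrade, and removal of software running on Tanzu clusters. Each package is created with the Carvel tools and follows our packaging process. Packages are bundled into a single collection, called a package repository, and pushed to an OCI-compliant registry.

In a Tanzu cluster, kapp-controller continuously watches for package repositories. When the cluster is told about a package repository (for example, through the tanzu package repository command), kapp-controller pulls that repository and makes its packages available to the cluster. This relationship is shown below.

With packages available in the cluster, users can install them with the tanzu CLI. Creating a PackageInstall resource in the cluster instructs kapp-controller to download the package and install the software in the cluster. This process is shown below.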
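To show what such a resource can look like, here is a minimal sketch that renders a PackageInstall manifest from a few arguments. The function name, the default namespace, and the service account are assumptions for illustration; the service account has to exist already with enough permissions for kapp-controller to install the package.

```shell
#!/bin/sh
# Render a PackageInstall manifest on stdout.
# $1 = install name, $2 = package name, $3 = version constraint,
# $4 = service account used by kapp-controller (hypothetical, must already exist).
render_package_install() {
    cat <<EOF
apiVersion: packaging.carvel.dev/v1alpha1
kind: PackageInstall
metadata:
  name: $1
  namespace: default
spec:
  serviceAccountName: $4
  packageRef:
    refName: $2
    versionSelection:
      constraints: "$3"
EOF
}
```

For example, render_package_install cert-manager cert-manager.community.tanzu.vmware.com 1.8.0 demo-sa prints a manifest that could be piped to kubectl apply -f -. The tanzu package install command creates an equivalent resource for you, so writing the manifest by hand is mainly useful for understanding what kapp-controller reacts to.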

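Note: If you deploy a standalone cluster, the default installation automatically installs the Tanzu Community Edition package repository, tce-repo.

Package management extends the functionality of Tanzu Community Edition. You discover and deploy packages with the Tanzu CLI. A Tanzu package is an aggregation of Kubernetes configuration and its associated container images into a versioned, distributable bundle that can be deployed as an OCI container image. Packages are installed into Tanzu clusters.

User-managed packages: deployed into a cluster, with a lifecycle that is managed independently of the cluster.

Core packages: deployed into a cluster, usually right after cluster bootstrap. Their lifecycle is managed as part of the cluster.

Package repository: a collection of packages. It defines metadata that lets you discover, install, manage, and upgrade packages on a cluster. A package must be discoverable through a package repository before it can be deployed to a cluster.

In Tanzu Community Edition, a package repository is a collection of Kubernetes custom resources handled by kapp-controller. Much like a Linux package repository, a Tanzu package repository declaratively defines metadata that makes packages discoverable, installable, manageable, and upgradable on a running cluster.

Tanzu Community Edition provides a package repository named tce-repo, which supplies the set of packages needed to start building an application platform on Kubernetes. You can create your own package repository to distribute different software.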

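5. kube-vip

By default, Tanzu Community Edition uses kube-vip for control plane high availability (NSX AVI is also supported as a replacement).

kube-vip provides Kubernetes-native HA load balancing on your control plane nodes, so you do not need to set up HAProxy and Keepalived externally to make the cluster highly available.

kube-vip is an open-source project that provides high availability and load balancing both inside and outside a Kubernetes cluster. VMware's Tanzu project has used kube-vip to replace the HAProxy load balancer for vSphere deployments. This section looks first at how kube-vip provides high availability and load balancing for the Kubernetes control plane.

kube-vip was originally created to provide an HA solution for the Kubernetes control plane. Over time it has evolved to bring the same functionality to Kubernetes Services of type LoadBalancer.

TCE does not currently use kube-vip to publish Services of type LoadBalancer.

Project links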

https://github.com/kube-vip/kube-vip

https://kube-vip.chipzoller.dev/docs/

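Test topology

Deployment steps

Deploying in managed-cluster mode

1. Download the Tanzu CLI

First, download the Tanzu CLI from the address below. The latest version at the time of writing is 0.12.1.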

https://github.com/vmware-tanzu/community-edition/releases/download/v0.12.1/tce-linux-amd64-v0.12.1.tar.gz

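In addition, managed-cluster mode requires an OVA template. Downloading the OVA requires a VMware Customer Connect account, which you can register at the link below. The latest template can be downloaded from the TKG product page.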

https://customerconnect.vmware.com/account-registration

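2. Prepare the bootstrap host

Deploying managed clusters requires a bootstrap host. The installer temporarily builds a local bootstrap cluster on it, which is used to create the management cluster. CentOS 7.x with at least 2 vCPUs and 4 GB of memory is recommended.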
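A quick way to check a candidate host against these numbers is sketched below. The function names are hypothetical, and the memory threshold is deliberately set to 3.5 GiB rather than a strict 4 GiB, on the assumption that a 4 GB machine reports slightly less in /proc/meminfo because the kernel reserves some memory.

```shell
#!/bin/sh
# Succeed when the given vCPU count and memory (in kB) meet the suggested
# minimums: 2 vCPUs and roughly 4 GB of memory.
# 3670016 kB = 3.5 GiB, a looser bound chosen for this sketch (assumption).
meets_bootstrap_minimum() {
    cpus=$1
    mem_kb=$2
    [ "$cpus" -ge 2 ] && [ "$mem_kb" -ge 3670016 ]
}

# Read the values of the local Linux host and report the result.
check_bootstrap_host() {
    cpus=$(nproc)
    mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    if meets_bootstrap_minimum "$cpus" "$mem_kb"; then
        echo "ok: ${cpus} vCPU, ${mem_kb} kB memory"
    else
        echo "too small: ${cpus} vCPU, ${mem_kb} kB memory" >&2
        return 1
    fi
}
```

Running check_bootstrap_host on the bootstrap host before starting the installer avoids finding out about an undersized VM halfway through cluster creation.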

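3. Configure the bootstrap host

For the configuration procedure, see my earlier article 《Tanzu学习系列之TKGm 1.4 for vSphere 快速部署》 (Tanzu Learning Series: Quick Deployment of TKGm 1.4 for vSphere).

4. Install kubectl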

[root@tanzu-cli-tce ~]# curl -LO https://dl.k8s.io/release/v1.20.1/bin/linux/amd64/kubectl

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 154 100 154 0 0 161 0 --:--:-- --:--:-- --:--:-- 161

100 38.3M 100 38.3M 0 0 9779k 0 0:00:04 0:00:04 --:--:-- 24.6M

[root@tanzu-cli-tce ~]# sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

# kubectl version

Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.1", GitCommit:"c4d752765b3bbac2237bf87cf0b1c2e307844666", GitTreeState:"clean", BuildDate:"2020-12-18T12:09:25Z", GoVersion:"go1.15.5", Compiler:"gc", Platform:"linux/amd64"}

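The connection to the server localhost:8080 was refused - did you specify the right host or port?

5. Install the Tanzu CLI

1) Extract the downloaded archive: tar xzvf tce-linux-amd64-v0.12.1.tar.gz

By default, the installer must be run as a non-root user. To allow installation as root, edit the extracted install.sh and remove the ! from != "true" in the root check, which then reads as follows. Because the script also reads the ALLOW_INSTALL_AS_ROOT environment variable, running ALLOW_INSTALL_AS_ROOT=true ./install.sh against the unmodified script should have the same effect.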

ALLOW_INSTALL_AS_ROOT="${ALLOW_INSTALL_AS_ROOT:-""}"
if [[ "$EUID" -eq 0 && "${ALLOW_INSTALL_AS_ROOT}" = "true" ]]; then
  error_exit "Do not run this script as root"
fi

[root@tanzu-cli-tce tce-linux-amd64-v0.12.1]# ./install.sh

+ set +x

====================================

Installing Tanzu Community Edition

====================================

Installing tanzu cli to /usr/local/bin/tanzu

Checking for required plugins...

Installing plugin 'apps:v0.6.0'

Installing plugin 'builder:v0.11.4'

Installing plugin 'cluster:v0.11.4'

Installing plugin 'codegen:v0.11.4'

Installing plugin 'conformance:v0.12.1'

Installing plugin 'diagnostics:v0.12.1'

Installing plugin 'kubernetes-release:v0.11.4'

Installing plugin 'login:v0.11.4'

Installing plugin 'management-cluster:v0.11.4'

Installing plugin 'package:v0.11.4'

Installing plugin 'pinniped-auth:v0.11.4'

Installing plugin 'secret:v0.11.4'

Installing plugin 'unmanaged-cluster:v0.12.1'

Successfully installed all required plugins

✔ successfully initialized CLI

Installation complete!

2) After the installation completes, check the Tanzu CLI version and the installed plugins

[root@tanzu-cli-tce tce-linux-amd64-v0.12.1]# /usr/local/bin/tanzu version

version: v0.11.4

buildDate: 2022-05-17

sha: a9b8f3a

[root@tanzu-cli-tce tce-linux-amd64-v0.12.1]# tanzu plugin list

NAME DESCRIPTION SCOPE DISCOVERY VERSION STATUS

apps Applications on Kubernetes Standalone default-local v0.6.0 installed

builder Build Tanzu components Standalone default-local v0.11.4 installed

cluster Kubernetes cluster operations Standalone default-local v0.11.4 installed

codegen Tanzu code generation tool Standalone default-local v0.11.4 installed

conformance Run Sonobuoy conformance tests against clusters Standalone default-local v0.12.1 installed

diagnostics Cluster diagnostics Standalone default-local v0.12.1 installed

kubernetes-release Kubernetes release operations Standalone default-local v0.11.4 installed

login Login to the platform Standalone default-local v0.11.4 installed

management-cluster Kubernetes management cluster operations Standalone default-local v0.11.4 installed

package Tanzu package management Standalone default-local v0.11.4 installed

pinniped-auth Pinniped authentication operations (usually not directly invoked) Standalone default-local v0.11.4 installed

secret Tanzu secret management Standalone default-local v0.11.4 installed

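unmanaged-cluster Deploy and manage single-node, static, Tanzu clusters. Standalone default-local v0.12.1 installed

6. Generate an SSH key pair

Note: The public key is injected into the cluster nodes during installation so that you can log in to them over SSH without a password.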

[root@tanzu-cli-tce ~]# ssh-keygen -t rsa -b 4096 -C "tkg@tanzu.com"

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:c79LZWg8QCGWOPErhnkbEpa+2YtSBNHjtkoisAsnX2A tkg@tanzu.com

The key's randomart image is:

+---[RSA 4096]----+

| .o .oo.o. |

| . o.oo.o |

| o+. .. . |

|. Eo++ . o . |

|.o +=.= S . = o |

|* o +B + o o + |

|oB +o o o |

|. + . . . . |

| .. . o. |

+----[SHA256]-----+

# cat /root/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDCCsTJRjEiWsjs9SJ+5s4PDLYpaE25R9i1Ihh+PUdczFdOYIjsm50BYB2AvjY+u4Du7kCS+S3oZqom+WJ8DG1ljoDJ+3LW3INu0eg85v5Qkh+txbrYLArW4vZL5ON4kd9TZyZFpStKph0J8mqHgLEFnzHCF1xs3E6/Y4gwj1nXe80PFpl9CgiDz0ESbKp71KUJImIBlhEqADxnnSAmWvuyT6y2PbnvBFQO8s2AmGrzNEOKec0qyMWsrW7hYsmnquTXPuorGSVxre7OlEzKxgP2CstYvcdeEUeHUGpvQdfrzn6f/xa1PxPx+2x1mlpsLiYRMfFe//2RHGN1nEH4hcD+GG9X6NlseqHClScXKDD13CkZP6LL3cJwv661PBXPbCxMjdgUmZj3Y+sClwTU0gEw1KHnnTZR2gosCA6t/iV3d25BoqjXGfpVIu9Tlh3xZo8mNSDcFk4kEY9XlB7mRTUvUAGSWQsWu8Fq0U5j2IrUr9Ir/SM7fiSGs7PNGNzrgtkbAO1u2f5zCUjDJ/0SgV1Aoc55sgbXrzMBC03IvDqX3hpK6RPcIuMsLtEQGEbQg+lshgU3f4xX+CGXTOAj9PaPe5diMP/gF+CNgG0TL36B0U2xlizOMQj32lux9f19lEpcw7OJbyB82DpeY10gbrtlkxCyMSTuvT2WTNvMqSbWrw== tkg@tanzu.com

7. Deploy the management cluster

1) Start the installer UI on the bootstrap host

# tanzu management-cluster create --ui --bind 10.105.148.83:8088 --browser none --timeout 2h0m0s

Validating the pre-requisites...

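Serving kickstart UI at http://10.105.148.83:8088

2) Open the UI at http://10.105.148.83:8088 in a browser and choose to deploy on VMware vSphere

3) Enter the vCenter Server IP address, username, and password, and paste the contents of /root/.ssh/id_rsa.pub from the bootstrap host into the SSH PUBLIC KEY field

4) Select the TCE management cluster plan. For ease of testing, choose the Development plan, and choose kube-vip as the control plane HA solution (if you have purchased AVI, you can choose AVI instead)

5) If you have not purchased AVI, go straight to the next step

6) Keep the defaults and continue

7) Select the vSphere resources

8) Configure the TCE cluster network

9) Identity management: not configured in this test

Note: To configure it, see my article 《Tanzu学习系列之TKGm 1.4 for vSphere 组件集成(三)》 (Tanzu Learning Series: TKGm 1.4 for vSphere Component Integration, Part 3)

10) Select the OS image. This is the template created by importing the OVA downloaded earlier and converting it to a template

Note: Deploying the template

11) Review the configuration entered in the previous steps and copy the CLI command

12) Create the TCE management cluster with the command copied in the previous step, setting the log level to 9 (-v 9)

Watch for the interactive prompts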

[root@tanzu-cli-tce ~]# tanzu management-cluster create tcem --file /root/.config/tanzu/tkg/clusterconfigs/8rr2wgggqy.yaml -v 9

compatibility file (/root/.config/tanzu/tkg/compatibility/tkg-compatibility.yaml) already exists, skipping download

BOM files inside /root/.config/tanzu/tkg/bom already exists, skipping download

CEIP Opt-in status: false

Validating the pre-requisites...

vSphere 7.0 with Tanzu Detected.

You have connected to a vSphere 7.0 with Tanzu environment that includes an integrated Tanzu Kubernetes Grid Service which

turns a vSphere cluster into a platform for running Kubernetes workloads in dedicated resource pools. Configuring Tanzu

Kubernetes Grid Service is done through the vSphere HTML5 Client.

Tanzu Kubernetes Grid Service is the preferred way to consume Tanzu Kubernetes Grid in vSphere 7.0 environments. Alternatively you may

deploy a non-integrated Tanzu Kubernetes Grid instance on vSphere 7.0.

Note: To skip the prompts and directly deploy a non-integrated Tanzu Kubernetes Grid instance on vSphere 7.0, you can set the 'DEPLOY_TKG_ON_VSPHERE7' configuration variable to 'true'

Do you want to configure vSphere with Tanzu? [y/N]: n

Would you like to deploy a non-integrated Tanzu Kubernetes Grid management cluster on vSphere 7.0? [y/N]: y

Deploying TKG management cluster on vSphere 7.0 ...

Identity Provider not configured. Some authentication features won't work.

Using default value for CONTROL_PLANE_MACHINE_COUNT = 1. Reason: CONTROL_PLANE_MACHINE_COUNT variable is not set

Using default value for WORKER_MACHINE_COUNT = 1. Reason: WORKER_MACHINE_COUNT variable is not set

Checking if VSPHERE_CONTROL_PLANE_ENDPOINT 10.105.148.84 is already in use

Setting up management cluster...

Validating configuration...

Using infrastructure provider vsphere:v1.0.3

Generating cluster configuration...

Using default value for CONTROL_PLANE_MACHINE_COUNT = 1. Reason: CONTROL_PLANE_MACHINE_COUNT variable is not set

Using default value for WORKER_MACHINE_COUNT = 1. Reason: WORKER_MACHINE_COUNT variable is not set

Setting up bootstrapper...

Fetching configuration for kind node image...

kindConfig:

&{

{Cluster kind.x-k8s.io/v1alpha4} [{ map[] [{/var/run/docker.sock /var/run/docker.sock false false }] [] [] []}] {ipv4 0 100.96.0.0/11 100.64.0.0/13 false } map[] map[] [apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

imageRepository: projects.registry.vmware.com/tkg

etcd:

local:

imageRepository: projects.registry.vmware.com/tkg

imageTag: v3.5.2_vmware.3

dns:

type: CoreDNS

imageRepository: projects.registry.vmware.com/tkg

imageTag: v1.8.4_vmware.9] [] [] []}

Creating kind cluster: tkg-kind-ca54ujvv4sotkob7j3r0

Creating cluster "tkg-kind-ca54ujvv4sotkob7j3r0" ...

Ensuring node image (projects.registry.vmware.com/tkg/tanzu-framework-release/kind/node:v1.22.8_vmware.1-tkg.1_v0.11.1) ...

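Pulling image: projects.registry.vmware.com/tkg/tanzu-framework-release/kind/node:v1.22.8_vmware.1-tkg.1_v0.11.1 ...

13) After the TCE management cluster is created, run the following commands to get its kubeconfig file, then switch to the management cluster context to inspect it

# Get the management cluster kubeconfig file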

[root@tanzu-cli-tce cluster]# tanzu mc kubeconfig get --admin

Credentials of cluster 'tcem' have been saved

You can now access the cluster by running 'kubectl config use-context tcem-admin@tcem'

[root@tanzu-cli-tce cluster]# kubectl config use-context tcem-admin@tcem

Switched to context "tcem-admin@tcem".

# View the cluster nodes

[root@tanzu-cli-tce cluster]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

tcem-control-plane-7ws9r Ready control-plane,master 11h v1.22.8+vmware.1 10.105.148.162 10.105.148.162 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

tcem-md-0-89c575f99-mpvrx Ready <none> 11h v1.22.8+vmware.1 10.105.148.166 10.105.148.166 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

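[root@tanzu-cli-tce cluster]#

8. Deploy a workload cluster

Create the YAML configuration file for the workload cluster, using the configuration file generated when the TCE management cluster was created as a template.

In this test, that file is /root/.config/tanzu/tkg/clusterconfigs/8rr2wgggqy.yaml

# Edit the workload cluster YAML file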

[root@tanzu-cli-tce cluster]# cat w01.yaml

CLUSTER_NAME: tcew01

CLUSTER_PLAN: prod

CNI: antrea

CONTROL_PLANE_MACHINE_COUNT: 3

WORKER_MACHINE_COUNT: 3

VSPHERE_CONTROL_PLANE_DISK_GIB: "20"

VSPHERE_CONTROL_PLANE_ENDPOINT: 10.105.148.85

VSPHERE_CONTROL_PLANE_MEM_MIB: "4096"

VSPHERE_CONTROL_PLANE_NUM_CPUS: "2"

VSPHERE_DATACENTER: /WestDC

VSPHERE_DATASTORE: /WestDC/datastore/vsanDatastore

VSPHERE_FOLDER: /WestDC/vm

VSPHERE_INSECURE: "true"

VSPHERE_NETWORK: /WestDC/network/test

VSPHERE_PASSWORD: <encoded:Vk13YXJlMTIzIQ==>

VSPHERE_RESOURCE_POOL: /WestDC/host/westcluster/Resources/west-tkgm

VSPHERE_SERVER: 10.105.146.50

VSPHERE_SSH_AUTHORIZED_KEY: ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDCCsTJRjEiWsjs9SJ+5s4PDLYpaE25R9i1Ihh+PUdczFdOYIjsm50BYB2AvjY+u4Du7kCS+S3oZqom+WJ8DG1ljoDJ+3LW3INu0eg85v5Qkh+txbrYLArW4vZL5ON4kd9TZyZFpStKph0J8mqHgLEFnzHCF1xs3E6/Y4gwj1nXe80PFpl9CgiDz0ESbKp71KUJImIBlhEqADxnnSAmWvuyT6y2PbnvBFQO8s2AmGrzNEOKec0qyMWsrW7hYsmnquTXPuorGSVxre7OlEzKxgP2CstYvcdeEUeHUGpvQdfrzn6f/xa1PxPx+2x1mlpsLiYRMfFe//2RHGN1nEH4hcD+GG9X6NlseqHClScXKDD13CkZP6LL3cJwv661PBXPbCxMjdgUmZj3Y+sClwTU0gEw1KHnnTZR2gosCA6t/iV3d25BoqjXGfpVIu9Tlh3xZo8mNSDcFk4kEY9XlB7mRTUvUAGSWQsWu8Fq0U5j2IrUr9Ir/SM7fiSGs7PNGNzrgtkbAO1u2f5zCUjDJ/0SgV1Aoc55sgbXrzMBC03IvDqX3hpK6RPcIuMsLtEQGEbQg+lshgU3f4xX+CGXTOAj9PaPe5diMP/gF+CNgG0TL36B0U2xlizOMQj32lux9f19lEpcw7OJbyB82DpeY10gbrtlkxCyMSTuvT2WTNvMqSbWrw== tkg@tanzu.com

VSPHERE_TLS_THUMBPRINT: ""

VSPHERE_USERNAME: administrator@vsphere.local

VSPHERE_WORKER_DISK_GIB: "20"

VSPHERE_WORKER_MEM_MIB: "4096"

VSPHERE_WORKER_NUM_CPUS: "2"
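Before running the create command, a quick sanity check on a file like this can catch a missing key early. The sketch below is only an illustration: the helper names are invented, the list of required keys is an assumption rather than the Tanzu CLI's own validation, and the odd-count check reflects the usual guidance that etcd quorum works best with an odd number of control plane nodes.

```shell
#!/bin/sh
# Print the value of a top-level KEY from a flat "KEY: value" config file,
# with surrounding double quotes removed.
# $1 = file, $2 = key
cfg_get() {
    awk -v key="$2" -F': *' '
        $1 == key { sub(/^[^:]*: */, ""); gsub(/^"|"$/, ""); print; exit }
    ' "$1"
}

# Fail if a key assumed to be required is missing, or if the control plane
# count is even.
check_workload_config() {
    file=$1
    for key in CLUSTER_NAME CLUSTER_PLAN VSPHERE_CONTROL_PLANE_ENDPOINT; do
        if [ -z "$(cfg_get "$file" "$key")" ]; then
            echo "missing $key" >&2
            return 1
        fi
    done
    count=$(cfg_get "$file" CONTROL_PLANE_MACHINE_COUNT)
    if [ -n "$count" ] && [ $((count % 2)) -eq 0 ]; then
        echo "CONTROL_PLANE_MACHINE_COUNT should be odd, got $count" >&2
        return 1
    fi
}
```

With the file shown above, check_workload_config w01.yaml should pass, since the three keys are present and the control plane count is 3.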

# Create the workload cluster

tanzu cluster create -f w01.yaml -v 9

# The cluster is created; list the clusters

# tanzu cluster list

NAME NAMESPACE STATUS CONTROLPLANE WORKERS KUBERNETES ROLES PLAN

tcew01 default running 3/3 3/3 v1.22.8+vmware.1 <none> prod

# Get the workload cluster kubeconfig file

[root@tanzu-cli-tce cluster]# tanzu cluster kubeconfig get tcew01 --admin

Credentials of cluster 'tcew01' have been saved

You can now access the cluster by running 'kubectl config use-context tcew01-admin@tcew01'

# Switch to the workload cluster context

[root@tanzu-cli-tce cluster]# kubectl config use-context tcew01-admin@tcew01

Switched to context "tcew01-admin@tcew01".

# Check the workload cluster nodes

[root@tanzu-cli-tce cluster]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

tcew01-control-plane-2xtgz Ready control-plane,master 10h v1.22.8+vmware.1 10.105.148.172 10.105.148.172 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

tcew01-control-plane-8gwgf Ready control-plane,master 10h v1.22.8+vmware.1 10.105.148.167 10.105.148.167 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

tcew01-control-plane-wwzzp Ready control-plane,master 10h v1.22.8+vmware.1 10.105.148.171 10.105.148.171 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

tcew01-md-0-d59d7d994-tqnrm Ready <none> 10h v1.22.8+vmware.1 10.105.148.168 10.105.148.168 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

tcew01-md-1-7b7d98b898-qb6kw Ready <none> 10h v1.22.8+vmware.1 10.105.148.169 10.105.148.169 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

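tcew01-md-2-5bfdc65b44-zt7ds Ready <none> 10h v1.22.8+vmware.1 10.105.148.170 10.105.148.170 Ubuntu 20.04.4 LTS 5.4.0-107-generic containerd://1.5.9

9. Install MetalLB

As the open-source edition of TKG, TCE can use the enterprise load balancer NSX AVI, or replace it with the free load balancer MetalLB.

MetalLB is a simple way to add support for Services of type LoadBalancer to a Kubernetes cluster. It integrates well with most network setups.

Kubernetes itself does not implement LoadBalancer. On a public cloud you can use the provider supplied by the cloud vendor, and TKG can use NSX AVI. On bare metal or TCE, MetalLB serves the same purpose.

MetalLB provides two functions:

Address allocation: when a LoadBalancer Service is created, MetalLB assigns it an IP address taken from a preconfigured address pool. Likewise, when the Service is deleted, the allocated IP address returns to the pool.

External announcement: once an IP address is assigned, the network outside the cluster needs to learn that the address exists. MetalLB does this with standard protocols: ARP, NDP, or BGP.

There are two announcement modes. The first is Layer 2 mode, which uses ARP (IPv4) or NDP (IPv6). The second is BGP mode.

MetalLB runs two workloads:

Controller: a Deployment that watches Service changes and allocates or reclaims IP addresses.

Speaker: a DaemonSet that announces Service IP addresses externally.

Layer 2 mode is not load balancing in the strict sense, because all traffic first lands on a single node and is only then spread across the endpoints by kube-proxy. If that node fails, MetalLB moves the IP to another node and sends gratuitous ARP to tell clients about the move. Modern operating systems generally handle gratuitous ARP correctly, so failover rarely causes serious problems.

Layer 2 mode is the more general option and needs no extra equipment. However, because it relies on ARP/NDP, the address pool must be in the same subnet as the clients, which makes address planning slightly more cumbersome. It is recommended for testing.

BGP mode: every node in the cluster establishes a BGP session with the upstream router and tells the router how to forward Service traffic.

BGP mode lets MetalLB act as a true load balancer.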
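Because every LoadBalancer Service consumes one address from the pool, it helps to know how many addresses a range actually contains. The following is a small sketch with invented helper names that counts the addresses in a first-last IPv4 range, such as the 10.105.148.145-10.105.148.150 range configured later in this walkthrough.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to its integer value.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    # Split the address on dots into the four positional parameters.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Count the addresses in a "first-last" range, inclusive on both ends.
pool_size() {
    first=${1%-*}
    last=${1#*-}
    echo $(( $(ip_to_int "$last") - $(ip_to_int "$first") + 1 ))
}
```

For the range above, pool_size 10.105.148.145-10.105.148.150 prints 6, so at most six LoadBalancer Services can hold an address at the same time.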

Project links:

https://github.com/metallb/metallb

https://metallb.universe.tf/installation/

1) Install MetalLB. This test uses Layer 2 mode

[root@tanzu-cli-tce cluster]# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml

namespace/metallb-system created

[root@tanzu-cli-tce cluster]# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/controller created

podsecuritypolicy.policy/speaker created

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/config-watcher created

role.rbac.authorization.k8s.io/pod-lister created

role.rbac.authorization.k8s.io/controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/config-watcher created

rolebinding.rbac.authorization.k8s.io/pod-lister created

rolebinding.rbac.authorization.k8s.io/controller created

daemonset.apps/speaker created

deployment.apps/controller created

2) Configure MetalLB Layer 2 mode: set the address range and apply the configuration file

[root@tanzu-cli-tce cluster]# cat metalbl2.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 10.105.148.145-10.105.148.150

[root@tanzu-cli-tce cluster]# kubectl apply -f metalbl2.yaml

configmap/config created

3) Confirm that MetalLB is running

[root@tanzu-cli-tce cluster]# kubectl get all -n metallb-system

NAME READY STATUS RESTARTS AGE

pod/controller-66445f859d-tcnnf 1/1 Running 0 41m

pod/speaker-9fsbh 1/1 Running 0 41m

pod/speaker-c84gk 1/1 Running 0 41m

pod/speaker-dn6h8 1/1 Running 0 41m

pod/speaker-pnv26 1/1 Running 0 41m

pod/speaker-sxzk5 1/1 Running 0 41m

pod/speaker-vst6z 1/1 Running 0 41m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/speaker 6 6 6 6 6 kubernetes.io/os=linux 41m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/controller 1/1 1 1 41m

NAME DESIRED CURRENT READY AGE

replicaset.apps/controller-66445f859d 1 1 1 41m

4) Test the LoadBalancer function of MetalLB in Layer 2 mode

[root@tanzu-cli-tce cluster]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@tanzu-cli-tce cluster]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6799fc88d8-wgqzg 1/1 Running 0 29s

[root@tanzu-cli-tce cluster]# kubectl expose deployment nginx --type=LoadBalancer --port=80

service/nginx exposed
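Once the Service is exposed, MetalLB should assign it an address from the pool, although this can take a moment. Below is a minimal sketch for waiting on that address, assuming kubectl points at the workload cluster. The function names and the retry values are made up for illustration.

```shell
#!/bin/sh
# Print the external IP assigned to a LoadBalancer Service.
# Prints nothing if no address has been assigned yet.
svc_external_ip() {
    kubectl get svc "$1" -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Poll until the Service has an external IP, then print it.
# $1 = Service name, $2 = maximum attempts, $3 = seconds between attempts
wait_for_external_ip() {
    attempts=$2
    while [ "$attempts" -gt 0 ]; do
        ip=$(svc_external_ip "$1")
        if [ -n "$ip" ]; then
            echo "$ip"
            return 0
        fi
        attempts=$((attempts - 1))
        sleep "$3"
    done
    echo "no external IP for $1" >&2
    return 1
}
```

A typical use would be ip=$(wait_for_external_ip nginx 30 2) followed by curl -s -o /dev/null -w '%{http_code}\n' "http://$ip" from a machine in the same subnet, to confirm that nginx answers on the assigned address.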

[root@tanzu-cli-tce cluster]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 100.64.0.1 <none> 443/TCP 11h

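nginx LoadBalancer 100.69.250.215 10.105.148.145 80:30138/TCP 5s

Deploying a standalone cluster on Linux

A Tanzu Community Edition standalone cluster provides a single-node local workstation cluster for development and test environments. It needs minimal local resources and deploys quickly.

Tanzu Community Edition supports two standalone cluster providers, kind and minikube, and can be deployed on Linux, macOS, and Windows.

kind is the default cluster provider and is included in the standalone cluster binary, so only Docker needs to be installed. minikube is an alternative provider. To use it, you must first install minikube and a container or virtual machine manager that minikube supports, such as Docker.

1) Install a standalone cluster on Linux (Docker Engine is required). This test runs on the bootstrap host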

[root@tanzu-cli-tce cluster]# tanzu unmanaged-cluster create tceu -v 9

📁 Created cluster directory

🧲 Resolving and checking Tanzu Kubernetes release (TKr) compatibility file

projects.registry.vmware.com/tce/compatibility

Downloaded to: /root/.config/tanzu/tkg/unmanaged/compatibility/projects.registry.vmware.com_tce_compatibility_v9

🔧 Resolving TKr

projects.registry.vmware.com/tce/tkr:v1.22.7-2

Downloaded to: /root/.config/tanzu/tkg/unmanaged/bom/projects.registry.vmware.com_tce_tkr_v1.22.7-2

Rendered Config: /root/.config/tanzu/tkg/unmanaged/tceu/config.yaml

Bootstrap Logs: /root/.config/tanzu/tkg/unmanaged/tceu/bootstrap.log

🔧 Processing Tanzu Kubernetes Release

🎨 Selected base image

projects.registry.vmware.com/tce/kind:v1.22.7

📦 Selected core package repository

projects.registry.vmware.com/tce/repo-12:0.12.0

📦 Selected additional package repositories

projects.registry.vmware.com/tce/main:0.12.0

📦 Selected kapp-controller image bundle

projects.registry.vmware.com/tce/kapp-controller-multi-pkg:v0.30.1

🚀 Creating cluster tceu

Cluster creation using kind!

❤️ Checkout this awesome project at https://kind.sigs.k8s.io

Base image downloaded

Cluster created

To troubleshoot, use:

kubectl ${COMMAND} --kubeconfig /root/.config/tanzu/tkg/unmanaged/tceu/kube.conf

📧 Installing kapp-controller

kapp-controller status: Running

📧 Installing package repositories

tkg-core-repository package repo status: Reconcile succeeded

🌐 Installing CNI

calico.community.tanzu.vmware.com:3.22.1

✅ Cluster created

🎮 kubectl context set to tceu

View available packages:

tanzu package available list

View running pods:

kubectl get po -A

Delete this cluster:

tanzu unmanaged delete tceu

[root@tanzu-cli-tce cluster]# tanzu unmanaged-cluster list

NAME PROVIDER STATUS

tceu kind Running

2) View the kubeconfig

[root@tanzu-cli-tce cluster]# kubectl config view --minify

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://127.0.0.1:45406
  name: kind-tceu
contexts:
- context:
    cluster: kind-tceu
    user: kind-tceu
  name: kind-tceu
current-context: kind-tceu
kind: Config
preferences: {}
users:
- name: kind-tceu
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

3) View the Tanzu package repositories

[root@tanzu-cli-tce cluster]# tanzu package repository list --all-namespaces

NAME REPOSITORY TAG STATUS DETAILS NAMESPACE

projects.registry.vmware.com-tce-main-0.12.0 projects.registry.vmware.com/tce/main 0.12.0 Reconcile succeeded tanzu-package-repo-global

tkg-core-repository projects.registry.vmware.com/tce/repo-12 0.12.0 Reconcile succeeded tkg-system

[root@tanzu-cli-tce cluster]# tanzu package available list

NAME DISPLAY-NAME SHORT-DESCRIPTION LATEST-VERSION

app-toolkit.community.tanzu.vmware.com App-Toolkit package for TCE Kubernetes-native toolkit to support application lifecycle 0.2.0

cartographer-catalog.community.tanzu.vmware.com Cartographer Catalog Reusable Cartographer blueprints 0.3.0

cartographer.community.tanzu.vmware.com Cartographer Kubernetes native Supply Chain Choreographer. 0.3.0

cert-injection-webhook.community.tanzu.vmware.com cert-injection-webhook The Cert Injection Webhook injects CA certificates and proxy environment variables into pods 0.1.1

cert-manager.community.tanzu.vmware.com cert-manager Certificate management 1.8.0

contour.community.tanzu.vmware.com contour An ingress controller 1.20.1

external-dns.community.tanzu.vmware.com external-dns This package provides DNS synchronization functionality. 0.10.0

fluent-bit.community.tanzu.vmware.com fluent-bit Fluent Bit is a fast Log Processor and Forwarder 1.7.5

fluxcd-source-controller.community.tanzu.vmware.com Flux Source Controller The source-controller is a Kubernetes operator, specialised in artifacts acquisition from external sources such as Git, Helm repositories and S3 buckets. 0.21.5

gatekeeper.community.tanzu.vmware.com gatekeeper policy management 3.7.1

grafana.community.tanzu.vmware.com grafana Visualization and analytics software 7.5.11

harbor.community.tanzu.vmware.com